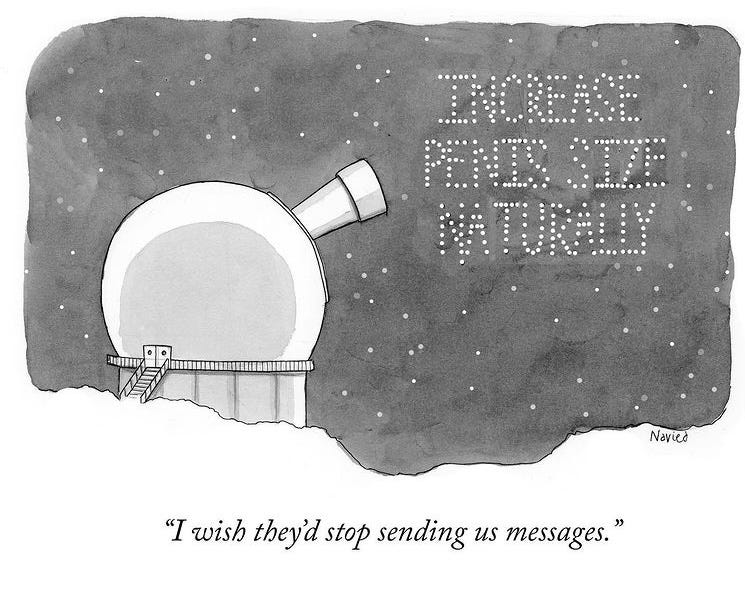

Enlarge your penis - Reflections on AI and context

Now that I have your attention...

Recently I was “talking” to a friendly neighborhood AI, trying to get some insights on a Process Mining dataset, and became instantly frustrated with the answers it was giving me. A question about the value of a simple boolean attribute (true or false) was eluding my chosen LLM (for privacy reasons, let's call it HAL 9000).

Since I am not a techie, I immediately asked one of my colleagues, who automatically told me that I was not prompting it right and that these new toys need “Context” to provide useful insights. Believe me, after researching common prompting practices and methodologies, I tried every prompt I could think of short of a Shakespeare play and still could not get a correct answer from HAL. So maybe this was not just about “Context”, and there was something more.

I was immediately reminded of the recommendation algorithms on content sites, which, I must say, I have not had very good experiences with. Granted, they are not as extreme as the “enlarge your penis” material that I keep getting in my spam folder (and for which there is no training or “context” needed, other than send to: the whole male population), but many a recommendation from the YouTube and even Netflix algorithms has felt that way.

So naturally I went back to my knowledgeable colleague to try to understand the nature of the problem. I was told that context is not just about how I queried HAL, but also about the material it had available and on which it had been trained to address specific tasks. It turns out that LLMs are very good at dealing with language, but not so adept at understanding other types of data. In my case, poor old HAL didn't know exactly what data it needed, or where to find it, to answer my question, because that data was not, strictly speaking, in language form. In other words, it did not understand the “context”, and it would take more than a good prompt to solve this.
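For the more technically inclined reader, here is one way to picture what “putting the data in language form” could look like. This is only a minimal sketch under loud assumptions: the rows, the column names (`case_id`, `activity`, `is_rework`) and the helper `describe_boolean` are all made up for illustration, and nothing here is what HAL or my colleague actually used. It simply turns a boolean column into plain sentences that could be pasted into a prompt.

```python
from collections import Counter

# Hypothetical event-log rows; the column names are assumptions for illustration.
EVENTS = [
    {"case_id": "A1", "activity": "Approve invoice", "is_rework": False},
    {"case_id": "A1", "activity": "Pay invoice", "is_rework": False},
    {"case_id": "B2", "activity": "Approve invoice", "is_rework": True},
]


def describe_boolean(rows, attribute):
    """Turn a boolean column into plain sentences a language model can read."""
    # Count how often the attribute is true and how often it is false.
    counts = Counter(bool(row[attribute]) for row in rows)
    lines = [
        f"The attribute '{attribute}' is true in {counts[True]} of {len(rows)} events "
        f"and false in {counts[False]}."
    ]
    # Add one sentence per row so the model can see where each value occurs.
    for row in rows:
        value = "true" if row[attribute] else "false"
        lines.append(
            f"In case {row['case_id']}, activity '{row['activity']}' has {attribute} = {value}."
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(describe_boolean(EVENTS, "is_rework"))
```

Whether text like this would have been enough for HAL to answer my question is an open guess; the point is only that the numbers get spelled out in words instead of sitting in a table the model may not know how to read.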

My takeaway from this, and I think a valid warning for anyone who places too high an expectation on the powers of LLMs for this type of work, is that you need to do far more with these models than give them simple written context. Otherwise, some of the answers you get will feel as flat and generic as your everyday “enlarge your penis” spam.

Data-to-text context, maybe? But that is material for another post…

(*) The author of this post is a complete tech ignoramus who has been generously allowed by his colleagues to vent some of his puzzlement and frustrations in this publication (humor him, they say). These posts, which he will try to collect under the heading “Conversations with HAL 9000”, will therefore try to add some perspective from the point of view of a simple business user. Don't hold it against him.

Great post, John. LLMs are skyrocketing in popularity. Until we hit their ceiling, we will keep fantasizing about their possibilities, until we accept how far they can go and who will be in charge of exploiting them.

They will probably be contexts supervised by a person, or maybe not. But after the big BOOM, the seams are starting to show.

This does not take away from the fact that it is an impressive leap, but many more are needed before we become second-class citizens. With a long or a short penis, for people of the male gender, and may everyone else not feel affected.